Let’s break it down.

🎨 1. The Prompt-to-Image Pipeline

At the core of the Ghibli AI Studio is likely a diffusion-based image generation model. These models are trained to generate high-quality images from noise — literally. Here's the basic process:

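1. Noise Input: The model starts from a field of random Gaussian noise. (In latent diffusion models such as Stable Diffusion, this noise lives in a compressed latent space rather than in raw pixels.)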

2. Prompt Interpretation: The text prompt ("make this look like a Ghibli background") is converted by a text encoder into an embedding, a numerical representation that guides every later step.

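3. Diffusion Steps: Over a series of iterations, the model predicts the noise in the current image and removes part of it, guided by the prompt embedding, until a coherent image emerges. The starting noise determines which particular variation appears, which is why the same prompt can produce different images.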

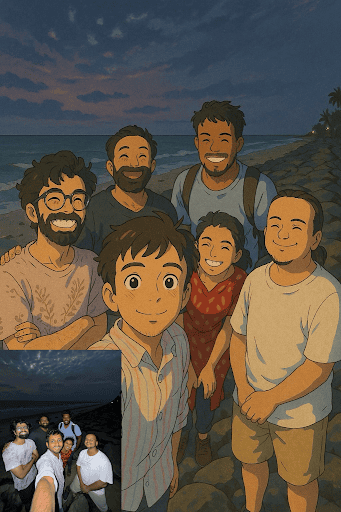

4. Stylization Layer: If the user provides a photo, it’s likely passed through a style transfer or image-to-image model that maps features of the input image onto a Ghibli-inspired aesthetic.
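The denoising loop in steps 1 to 3 can be sketched in plain Python. This is a toy DDPM-style sampler, not the production system: `oracle_noise_predictor` is a hypothetical stand-in for the trained neural network, which in a real model is conditioned on the prompt embedding and has to learn to predict noise. Here it cheats by already knowing the target "image" (a short list of pixel values), so the loop should land on that target. The noise schedule is the linear one from the original DDPM paper (1e-4 to 0.02 over 1,000 steps); all function names are illustrative.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, spaced linearly as in the original DDPM paper."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def oracle_noise_predictor(x_t, alpha_bar_t, target):
    """Hypothetical stand-in for a trained, prompt-conditioned denoising network.

    It recovers the exact noise in x_t only because it already knows the clean target.
    """
    return [(x - math.sqrt(alpha_bar_t) * x0) / math.sqrt(1.0 - alpha_bar_t)
            for x, x0 in zip(x_t, target)]


def ddpm_sample(target, num_steps=1000, seed=0):
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:  # cumulative product: alpha_bar_t = alpha_1 * ... * alpha_t
        running *= a
        alpha_bars.append(running)

    # Step 1: start from pure Gaussian noise, one value per "pixel".
    x = [rng.gauss(0.0, 1.0) for _ in target]

    # Step 3: walk the steps backwards, removing a little predicted noise each time.
    for t in reversed(range(num_steps)):
        eps = oracle_noise_predictor(x, alpha_bars[t], target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Re-inject a smaller amount of fresh noise (DDPM posterior variance).
            var = betas[t] * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t])
            x = [m + math.sqrt(var) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # the final step adds no noise
    return x


target = [0.2, -0.5, 0.9, 0.0]  # a tiny 4-pixel "image"
result = ddpm_sample(target, seed=1)
print([round(v, 3) for v in result])
```

The loop itself is small; in a real system nearly all of the difficulty lives in the trained network that replaces the oracle, and in running this on large latent tensors instead of a four-value list.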

🎮 2. Style Transfer: The Ghibli Look

Creating the signature Ghibli feel isn’t just about pastel skies and soft lighting. It involves:

• Color Palette Mapping: The model likely adjusts hues, brightness, and contrast to match typical Ghibli tones (earthy colors, watercolor-like textures, and soft shading).

• Edge Simplification: Details like facial features and landscapes are stylized to resemble 2D animation, with strong outlines and flat color fields.

• Texture Simulation: Some models simulate brushstroke or pencil textures to give that hand-drawn feeling.
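The color-palette idea can be illustrated with a simple nearest-color quantizer. This is a hand-written post-processing sketch, not how a diffusion model works internally (a model picks up such tendencies implicitly from its training data), and the `GHIBLI_LIKE_PALETTE` values are hypothetical colors chosen for illustration, not an official palette. Snapping every pixel to a small palette also produces the flat color fields mentioned above.

```python
# Hypothetical "earthy" palette, chosen for illustration only (RGB, 0-255).
GHIBLI_LIKE_PALETTE = {
    "sky_blue": (135, 190, 220),
    "meadow_green": (120, 160, 90),
    "earth_brown": (140, 100, 70),
    "cream": (240, 230, 200),
    "shadow_navy": (50, 60, 90),
}


def nearest_palette_color(pixel, palette=GHIBLI_LIKE_PALETTE):
    """Return the name of the palette color closest to `pixel` (squared RGB distance)."""
    return min(
        palette,
        key=lambda name: sum((p - c) ** 2 for p, c in zip(pixel, palette[name])),
    )


def quantize_image(pixels, palette=GHIBLI_LIKE_PALETTE):
    """Snap every (r, g, b) pixel to its nearest palette color, producing flat color fields."""
    return [palette[nearest_palette_color(px, palette)] for px in pixels]


photo_row = [(130, 185, 225), (10, 10, 10), (250, 250, 250)]
print(quantize_image(photo_row))
# [(135, 190, 220), (50, 60, 90), (240, 230, 200)]
```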

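In a diffusion-based system, these palette, edge, and texture effects could be achieved using a model like ControlNet, which conditions generation on structural cues extracted from the input photo (such as edge or depth maps), combined with a LoRA (Low-Rank Adaptation) fine-tuned on Ghibli-style datasets to shift the model's overall style.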
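The LoRA half of that combination is easy to show in isolation. A LoRA leaves a pretrained weight matrix `W` frozen and learns two small matrices, `A` and `B`, whose product is added on top and scaled by `alpha / r`; `B` starts at zero, so the adapter initially changes nothing. The sketch below uses plain Python lists and made-up sizes: it illustrates the math, not any particular library's API.

```python
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(x, W, A, B, alpha, r):
    """y = W x + (alpha / r) * B (A x), with W frozen and only A and B trained."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))  # A is r x d_in, B is d_out x r
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def lora_param_count(d_in, d_out, r):
    """(trainable LoRA parameters, parameters in the full weight matrix)."""
    return r * (d_in + d_out), d_in * d_out


d_in, d_out, r, alpha = 4, 3, 2, 4
rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(d_out)]  # "pretrained", frozen
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]      # small random init
B = [[0.0] * r for _ in range(d_out)]                                  # zero init: a no-op at first

x = [1.0, 0.5, -0.5, 2.0]
print(lora_forward(x, W, A, B, alpha, r) == matvec(W, x))  # True before any training
print(lora_param_count(768, 768, 8))  # (12288, 589824)
```

The parameter count is the practical point: for a 768 x 768 layer, a rank-8 adapter trains about 2% as many values as full fine-tuning, which is why style adapters can be trained and shared separately from the base model.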

🧠 3. The Role of GPT-4o

While GPT-4o (Omni) is best known for its text and multimodal understanding, in this system it likely acts as the orchestrator:

• It interprets the user prompt and any uploaded image.

• It determines whether to generate a new image from scratch or apply a transformation to an uploaded photo.

• It likely calls on a specialized image generation model (like DALL·E 3 or another diffusion model) with custom fine-tuning on Ghibli-style datasets.
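The orchestration role described above can be pictured as a small routing step. Everything in this sketch is hypothetical: `GenerationRequest`, `GenerationPlan`, `plan_generation`, the `strength` values, and the mode names are invented for illustration and do not describe OpenAI's actual internals, which are not public.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GenerationRequest:
    prompt: str
    image_bytes: Optional[bytes] = None  # set when the user uploads a photo


@dataclass
class GenerationPlan:
    mode: str        # "text_to_image" or "image_to_image"
    prompt: str
    strength: float  # hypothetical: how far the output may drift from the photo
    init_image: Optional[bytes] = None


def plan_generation(request: GenerationRequest) -> GenerationPlan:
    """Decide whether to generate from scratch or transform an uploaded photo."""
    prompt = request.prompt.strip()
    if not prompt:
        raise ValueError("empty prompt")
    if request.image_bytes:
        # Keep the photo's layout, restyle its look.
        return GenerationPlan("image_to_image", prompt, strength=0.6,
                              init_image=request.image_bytes)
    # No photo: start from pure noise, so the prompt alone drives the result.
    return GenerationPlan("text_to_image", prompt, strength=1.0)


print(plan_generation(GenerationRequest("a Ghibli-style hillside village")).mode)
# text_to_image
print(plan_generation(GenerationRequest("make this Ghibli-style", b"<photo bytes>")).mode)
# image_to_image
```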

🤫 4. Training Data: The Elephant in the Room

The AI’s ability to mimic the Ghibli style so convincingly suggests that it was trained or fine-tuned on a large set of images inspired by (or closely resembling) Studio Ghibli’s work. Whether or not these datasets include actual frames from Ghibli films is a matter of ethical and legal concern — one that has already sparked industry-wide debate.

🌍 5. User Experience and Delivery

From the user’s perspective, it’s seamless:

• Upload a photo or provide a description.

• Prompt the model (“turn this into a Ghibli-style landscape”).

• Get a stunning result in seconds.

Behind the scenes, this involves several complex steps:

• Prompt parsing

• Image preprocessing (resizing, normalization)

• Model selection

• Postprocessing (upsampling, stylization filters)

• Caching or content moderation before delivery
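The stages listed above can be strung together in a short sketch operating on a tiny grayscale image. It covers a moderation check on the prompt, a cache keyed on the prompt and input pixels, normalization of 0-255 values into the [-1, 1] range commonly used as diffusion-model input, and a nearest-neighbor 2x upsample as a stand-in for real upsampling. `BLOCKED_TERMS`, `placeholder_model`, and the other names are hypothetical; a production system would use a learned upscaler and a dedicated moderation model rather than a keyword list, and the placeholder model simply returns its input so the pre/post-processing round trip is easy to check.

```python
import hashlib

BLOCKED_TERMS = {"gore"}  # hypothetical moderation list, for illustration only
_cache = {}


def normalize(image):
    """Map 0-255 pixel values to [-1.0, 1.0]."""
    return [[p / 127.5 - 1.0 for p in row] for row in image]


def denormalize(image):
    """Map [-1.0, 1.0] back to clamped 0-255 integers."""
    return [[max(0, min(255, round((p + 1.0) * 127.5))) for p in row] for row in image]


def upsample_2x(image):
    """Nearest-neighbor upsampling: repeat every pixel as a 2x2 block."""
    out = []
    for row in image:
        wide = [p for p in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def placeholder_model(prompt, tensor):
    """Hypothetical stand-in for the image model: returns its input unchanged."""
    return tensor


def run_pipeline(prompt, image):
    # 1. Prompt parsing and moderation, before any compute is spent.
    if set(prompt.lower().split()) & BLOCKED_TERMS:
        raise ValueError("prompt rejected by moderation")
    # 2. Cache lookup keyed on the prompt plus the input pixels.
    key = hashlib.sha256((prompt + repr(image)).encode()).hexdigest()
    if key in _cache:
        return _cache[key]
    # 3. Preprocess, run the (placeholder) model, postprocess.
    result = upsample_2x(denormalize(placeholder_model(prompt, normalize(image))))
    _cache[key] = result
    return result


photo = [[0, 255], [128, 64]]
print(run_pipeline("ghibli-style meadow", photo))
# [[0, 0, 255, 255], [0, 0, 255, 255], [128, 128, 64, 64], [128, 128, 64, 64]]
```

Checking moderation before the cache lookup means a blocked prompt is always rejected, even if a result for it had been cached earlier.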

ChatGPT’s Ghibli-style image generation is a technical marvel — it compresses years of hand-crafted animation style into an instant, AI-powered experience. But it also raises big questions around originality, copyright, and the role of human artists in a world where AI can mimic even the most iconic styles.

As this technology evolves, understanding the magic behind the curtain helps us appreciate both its power and the responsibilities that come with it.
